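I. Preface

1. AVAsset

An asset is a media resource, for example a file or an item from the user's photo library. An AVAsset is identified by a URL, which can point to a local file or to a network stream.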

2. AVAssetTrack

An AVAsset is a collection of tracks (AVAssetTrack), such as audio, video, text, closed captions, and subtitles.
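As a minimal sketch of how the tracks of an asset might be inspected, the helper below lists each track's media type, ID and duration. `DescribeTracks` is a hypothetical name introduced here for illustration, and the synchronous `tracks` property is used only to keep the example short.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper (not part of the article's project):
// returns one description string per track of the asset.
static NSArray<NSString *> *DescribeTracks(AVAsset *asset) {
    NSMutableArray<NSString *> *lines = [NSMutableArray array];
    for (AVAssetTrack *track in asset.tracks) {
        // timeRange is the range the track occupies on the asset's timeline
        Float64 seconds = CMTimeGetSeconds(track.timeRange.duration);
        [lines addObject:[NSString stringWithFormat:@"%@ track, ID %d, %.2f s",
                          track.mediaType, track.trackID, seconds]];
    }
    return lines;
}
```

Calling `DescribeTracks` on an asset loaded from a movie file would typically yield one entry for the video track and one for the audio track.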

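3. AVComposition / AVMutableComposition

AVMutableComposition lets you add and remove material from one or more AVAssets and combine it into a single composition, which is how a new video is assembled. To control how the combined audio and video are played back or exported, use AVMutableAudioMix and AVMutableVideoComposition to coordinate the resources.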

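4. AVMutableVideoComposition

Among the composition-related classes in AVFoundation, the central one is AVVideoComposition / AVMutableVideoComposition.

AVVideoComposition / AVMutableVideoComposition gives an overall description of how two or more video tracks are combined. It consists of a set of time ranges and instructions describing how the tracks are composited in each range; together these instructions cover every point on the composition's timeline.

AVVideoComposition / AVMutableVideoComposition manages all video tracks and determines the size of the final video (renderSize), so cropping is done here.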

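5. AVMutableCompositionTrack

An AVMutableCompositionTrack is a track inside the composition that combines several AVAssets into a new video. There are audio tracks, video tracks, and so on, and material of the matching type can be inserted into each (picture-in-picture, watermarks, etc.).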

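6. AVMutableVideoCompositionLayerInstruction

AVMutableVideoCompositionLayerInstruction applies per-track processing to one video track in the composition: affine transforms (scaling, rotation, translation), cropping, and opacity. Effects such as blur are not part of this class and need a custom compositor or Core Image filtering.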

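7. AVMutableVideoCompositionInstruction

An instruction determines the state of each track within one timeRange. Each instruction contains one or more AVMutableVideoCompositionLayerInstruction objects, and an AVVideoComposition is made up of several AVVideoCompositionInstruction objects.

The most important piece of data an AVVideoCompositionInstruction provides is its time range on the composition's timeline, that is, the range during which a particular compositing arrangement is in effect. The compositing to perform in that range is defined by the instruction's collection of AVMutableVideoCompositionLayerInstruction objects.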
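Because each instruction owns one time range on the composition's timeline, clips that play one after another need ranges laid end to end without overlap. The sketch below shows that bookkeeping in isolation; `ContiguousTimeRanges` is a hypothetical helper introduced here, and it takes durations wrapped in NSValue purely for convenience.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: lays clip durations end to end and returns the
// CMTimeRange (wrapped in NSValue) that each clip occupies on the timeline.
static NSArray<NSValue *> *ContiguousTimeRanges(NSArray<NSValue *> *durations) {
    NSMutableArray<NSValue *> *ranges = [NSMutableArray array];
    CMTime cursor = kCMTimeZero; // start of the next clip
    for (NSValue *value in durations) {
        CMTime duration = value.CMTimeValue;
        [ranges addObject:[NSValue valueWithCMTimeRange:CMTimeRangeMake(cursor, duration)]];
        cursor = CMTimeAdd(cursor, duration);
    }
    return ranges;
}
```

Each returned range could then be assigned to the `timeRange` of one AVMutableVideoCompositionInstruction, in the same order as the clips.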

8. AVAssetExportSession

AVAssetExportSession exports (and, where needed, transcodes) an asset to a file.

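9. AVAssetTrackSegment

An AVAssetTrackSegment represents one segment of an asset track and carries the time mapping from the source media to the track's timeline.

10. AVCompositionTrackSegment

An AVCompositionTrackSegment represents one segment of a composition track. It inherits from AVAssetTrackSegment and additionally records which source URL and source track the segment comes from.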

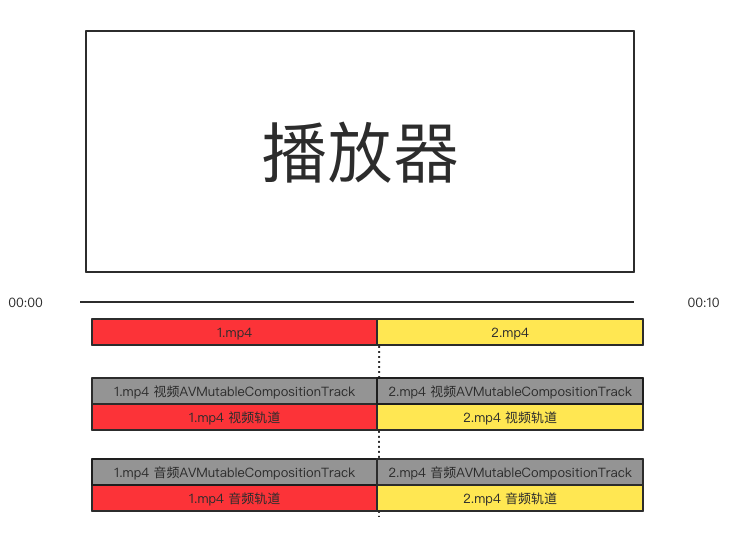

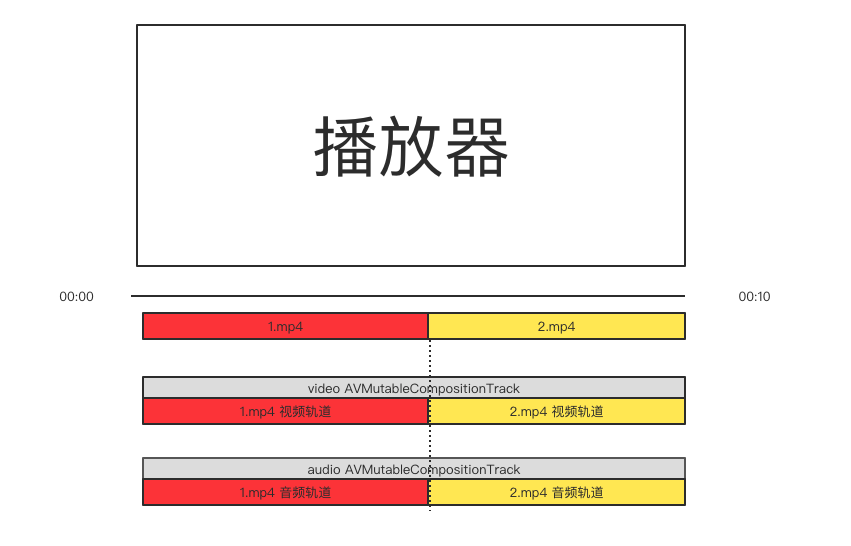

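II. Overview of merging multiple videos

![Figure 1: AVFoundation, merging multiple media (2), seamlessly joining multiple videos on one track](https://www.codersrc.com/wp-content/uploads/2021/07/25f9e794323b453.png)

AVComposition inherits from AVAsset. It combines media data from several source files so that they can be presented or processed together.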

AVMutableComposition *mutableComposition = [AVMutableComposition composition];

// Add resources here, for example:

// 1. Add the video track of media 1

// 2. Add the audio track of media 1

// 3. Add the video track of media 2

// 4. Add the audio track of media 2

// ...

// Copy the mutable composition to get an immutable one to use

AVComposition *composition = [mutableComposition copy];

AVPlayerItem *playerItem = [[AVPlayerItem alloc] initWithAsset:composition];

III. Merging multiple videos

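To avoid export failures, the approach is to extract the audio and video tracks from each AVURLAsset, rebuild an AVComposition from them, and then export it with AVAssetExportSession.

When rebuilding the AVComposition from several AVURLAssets, there are two options:

1. Each AVURLAsset gets its own audio track and its own video track;

2. All AVURLAssets share one audio track and one video track;

The sample code below uses the second option, with all AVURLAssets sharing a single audio track and a single video track: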
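For comparison, the first option (one composition track per asset) might be sketched as follows. This is an illustration under stated assumptions, not code from the article's project: `CompositionWithOneVideoTrackPerAsset` is a hypothetical name, only video tracks are handled, and assets without a video track are skipped.

```objc
#import <AVFoundation/AVFoundation.h>

// Sketch of option 1: each asset's video is placed on its own composition
// track, positioned so the clips still play back to back.
static AVMutableComposition *CompositionWithOneVideoTrackPerAsset(NSArray<AVAsset *> *assets) {
    AVMutableComposition *composition = [AVMutableComposition composition];
    CMTime insertTime = kCMTimeZero;
    for (AVAsset *asset in assets) {
        AVAssetTrack *source = [asset tracksWithMediaType:AVMediaTypeVideo].firstObject;
        if (source == nil) { continue; } // this asset has no video track
        AVMutableCompositionTrack *track =
            [composition addMutableTrackWithMediaType:AVMediaTypeVideo
                                     preferredTrackID:kCMPersistentTrackID_Invalid];
        NSError *error = nil;
        if (![track insertTimeRange:source.timeRange ofTrack:source atTime:insertTime error:&error]) {
            NSLog(@"Insert failed: %@", error);
            [composition removeTrack:track]; // do not leave an empty track behind
            continue;
        }
        insertTime = CMTimeAdd(insertTime, source.timeRange.duration);
    }
    return composition;
}
```

With this layout the composition holds several video tracks, so it typically also needs an AVVideoComposition whose instructions name the track that is visible in each time range.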

/******************************************************************************************/

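//@Author: 猿说编程

//@Blog (personal blog): www.codersrc.com

//@File: AVFoundation, merging multiple media (2), seamlessly joining multiple videos on one track

//@Time: 2021/08/21 07:30

//@Motto: A journey of a thousand miles is made of single steps, and rivers and seas of small streams; a rewarding life in programming takes persistent accumulation!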

/******************************************************************************************/

#import "ViewController.h"

#import <AVFoundation/AVFoundation.h>

@interface ViewController ()

{

}

@end

@implementation ViewController

- (void)viewDidLoad

{

[super viewDidLoad];

// Merge and export as soon as the view has loaded

[self exportMove];

}

#pragma mark - Get the video rotation angle

- (int)degressFromVideoFileWithAsset:(AVAsset *)asset {

int degress = 0;

NSArray *tracks = [asset tracksWithMediaType:AVMediaTypeVideo];

if([tracks count] > 0) {

AVAssetTrack *videoTrack = [tracks objectAtIndex:0];

CGAffineTransform t = videoTrack.preferredTransform;

if(t.a == 0 && t.b == 1.0 && t.c == -1.0 && t.d == 0){

// Portrait

degress = 90;

} else if(t.a == 0 && t.b == -1.0 && t.c == 1.0 && t.d == 0){

// PortraitUpsideDown

degress = 270;

} else if(t.a == 1.0 && t.b == 0 && t.c == 0 && t.d == 1.0){

// LandscapeRight

degress = 0;

} else if(t.a == -1.0 && t.b == 0 && t.c == 0 && t.d == -1.0){

// LandscapeLeft

degress = 180;

}

}

return degress;

}

#pragma mark - Export

- (void)startExportMP4VideoWithVideoAssets:(NSArray *)videoArrays presetName:(NSString *)presetName success:(void (^)(NSString *outputPath))success failure:(void (^)(NSString *errorMessage, NSError *error))failure {

AVMutableComposition *composition = [AVMutableComposition composition];

__block CMTime insertTime = kCMTimeZero;

__block AVMutableCompositionTrack *compositionVideoTrack = [composition addMutableTrackWithMediaType:AVMediaTypeVideo preferredTrackID:kCMPersistentTrackID_Invalid];

__block AVMutableCompositionTrack *compositionAudioTrack = [composition addMutableTrackWithMediaType:AVMediaTypeAudio preferredTrackID:kCMPersistentTrackID_Invalid];

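[videoArrays enumerateObjectsUsingBlock:^(id _Nonnull obj, NSUInteger idx, BOOL * _Nonnull stop) {

AVURLAsset *videoAsset = obj;

AVAssetTrack *sourceVideoTrack = [videoAsset tracksWithMediaType:AVMediaTypeVideo].firstObject;

AVAssetTrack *sourceAudioTrack = [videoAsset tracksWithMediaType:AVMediaTypeAudio].firstObject;

CMTimeRange sourceRange = CMTimeRangeMake(kCMTimeZero, videoAsset.duration);

NSError *insertError = nil;

// An asset may lack a video or an audio track; skip the missing one instead of inserting nil

if (sourceVideoTrack && ![compositionVideoTrack insertTimeRange:sourceRange ofTrack:sourceVideoTrack atTime:insertTime error:&insertError]) {

NSLog(@"Failed to insert video of asset %lu: %@", (unsigned long)idx, insertError);

}

if (sourceAudioTrack && ![compositionAudioTrack insertTimeRange:sourceRange ofTrack:sourceAudioTrack atTime:insertTime error:&insertError]) {

NSLog(@"Failed to insert audio of asset %lu: %@", (unsigned long)idx, insertError);

}

// The next asset starts where this one ends

insertTime = CMTimeAdd(insertTime, videoAsset.duration);

}];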

NSArray *presets = [AVAssetExportSession exportPresetsCompatibleWithAsset:composition];

if ([presets containsObject:presetName]) {

AVAssetExportSession *session = [[AVAssetExportSession alloc] initWithAsset:composition presetName:presetName];

// Output path

NSDateFormatter *formater = [[NSDateFormatter alloc] init];

[formater setDateFormat:@"yyyy-MM-dd-HH:mm:ss-SSS"];

NSString *outputPath = [NSHomeDirectory() stringByAppendingFormat:@"/tmp/video-%@.mp4", [formater stringFromDate:[NSDate date]]];

session.outputURL = [NSURL fileURLWithPath:outputPath];

// Optimize the file for network use

session.shouldOptimizeForNetworkUse = YES;

NSArray *supportedTypeArray = session.supportedFileTypes;

if ([supportedTypeArray containsObject:AVFileTypeMPEG4]) {

session.outputFileType = AVFileTypeMPEG4;

} else if (supportedTypeArray.count == 0) {

if (failure) {

failure(@"This video type cannot be exported", nil);

}

NSLog(@"No supported file types; this video type cannot be exported");

return;

} else {

session.outputFileType = [supportedTypeArray objectAtIndex:0];

}

if (![[NSFileManager defaultManager] fileExistsAtPath:[NSHomeDirectory() stringByAppendingFormat:@"/tmp"]]) {

[[NSFileManager defaultManager] createDirectoryAtPath:[NSHomeDirectory() stringByAppendingFormat:@"/tmp"] withIntermediateDirectories:YES attributes:nil error:nil];

}

// Rotation handling

AVMutableVideoComposition *videoComposition = [self fixedCompositionWithAsset:videoArrays];

if (videoComposition.renderSize.width) {

// Correct the video orientation

session.videoComposition = videoComposition;

}

// Start the export; the handler runs when it finishes

[session exportAsynchronouslyWithCompletionHandler:^{

dispatch_async(dispatch_get_main_queue(), ^{

switch (session.status) {

case AVAssetExportSessionStatusUnknown: {

NSLog(@"AVAssetExportSessionStatusUnknown");

} break;

case AVAssetExportSessionStatusWaiting: {

NSLog(@"AVAssetExportSessionStatusWaiting");

} break;

case AVAssetExportSessionStatusExporting: {

NSLog(@"AVAssetExportSessionStatusExporting");

} break;

case AVAssetExportSessionStatusCompleted: {

NSLog(@"AVAssetExportSessionStatusCompleted");

if (success) {

success(outputPath);

}

} break;

case AVAssetExportSessionStatusFailed: {

NSLog(@"AVAssetExportSessionStatusFailed");

if (failure) {

failure(@"Video export failed", session.error);

}

} break;

case AVAssetExportSessionStatusCancelled: {

NSLog(@"AVAssetExportSessionStatusCancelled");

if (failure) {

failure(@"The export task was cancelled", nil);

}

} break;

default: break;

}

});

}];

} else {

if (failure) {

NSString *errorMessage = [NSString stringWithFormat:@"This preset is not supported for the composition: %@", presetName];

failure(errorMessage, nil);

}

}

}

#pragma mark - Build a video composition that corrects orientation

- (AVMutableVideoComposition *)fixedCompositionWithAsset:(NSArray *)videoAssets {

AVMutableVideoComposition *videoComposition = [AVMutableVideoComposition videoComposition];

NSMutableArray *instructions = [NSMutableArray array];

// Start of the current asset on the composition timeline; instruction time ranges must not overlap

__block CMTime startTime = kCMTimeZero;

[videoAssets enumerateObjectsUsingBlock:^(id _Nonnull obj, NSUInteger idx, BOOL * _Nonnull stop) {

AVAsset *videoAsset = [videoAssets objectAtIndex:idx];

int degrees = [self degressFromVideoFileWithAsset:videoAsset];

// As in the original, unrotated assets get no instruction, so mixing rotated and unrotated assets leaves gaps in the instruction list

if (degrees != 0) {

CGAffineTransform translateToCenter;

CGAffineTransform mixedTransform;

videoComposition.frameDuration = CMTimeMake(1, 30);

NSArray *tracks = [videoAsset tracksWithMediaType:AVMediaTypeVideo];

AVAssetTrack *videoTrack = [tracks objectAtIndex:0];

AVMutableVideoCompositionInstruction *roateInstruction = [AVMutableVideoCompositionInstruction videoCompositionInstruction];

// Offset by startTime so that the second and later assets do not all start at zero

roateInstruction.timeRange = CMTimeRangeMake(startTime, [videoAsset duration]);

// Note: the layer instruction takes its trackID from the source track, which only matches the composition's video track if the two IDs happen to coincide

AVMutableVideoCompositionLayerInstruction *roateLayerInstruction = [AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack:videoTrack];

if (degrees == 90) {

// Rotate 90° clockwise

translateToCenter = CGAffineTransformMakeTranslation(videoTrack.naturalSize.height, 0.0);

mixedTransform = CGAffineTransformRotate(translateToCenter,M_PI_2);

videoComposition.renderSize = CGSizeMake(videoTrack.naturalSize.height,videoTrack.naturalSize.width);

[roateLayerInstruction setTransform:mixedTransform atTime:kCMTimeZero];

} else if(degrees == 180){

// Rotate 180° clockwise

translateToCenter = CGAffineTransformMakeTranslation(videoTrack.naturalSize.width, videoTrack.naturalSize.height);

mixedTransform = CGAffineTransformRotate(translateToCenter,M_PI);

videoComposition.renderSize = CGSizeMake(videoTrack.naturalSize.width,videoTrack.naturalSize.height);

[roateLayerInstruction setTransform:mixedTransform atTime:kCMTimeZero];

} else if(degrees == 270){

// Rotate 270° clockwise

translateToCenter = CGAffineTransformMakeTranslation(0.0, videoTrack.naturalSize.width);

mixedTransform = CGAffineTransformRotate(translateToCenter,M_PI_2*3.0);

videoComposition.renderSize = CGSizeMake(videoTrack.naturalSize.height,videoTrack.naturalSize.width);

[roateLayerInstruction setTransform:mixedTransform atTime:kCMTimeZero];

}

roateInstruction.layerInstructions = @[roateLayerInstruction];

[instructions addObject:roateInstruction];

}

// The next asset starts where this one ends

startTime = CMTimeAdd(startTime, [videoAsset duration]);

}];

// Attach the orientation instructions

videoComposition.instructions = instructions;

return videoComposition;

}

#pragma mark - Export entry point

-(BOOL)exportMove

{

// Load the media

NSURL* srcVideo = [NSURL fileURLWithPath:[[NSBundle mainBundle] pathForResource:@"789.MP4" ofType:nil]];

AVURLAsset* videoAsset = [[AVURLAsset alloc] initWithURL:srcVideo options:nil];

// NSURL* srcVideo1 = [NSURL fileURLWithPath:[[NSBundle mainBundle] pathForResource:@"123.MOV" ofType:nil]];

// AVURLAsset* videoAsset1 = [[AVURLAsset alloc] initWithURL:srcVideo1 options:nil];

NSArray* videoArrays = [[NSArray alloc] initWithObjects:videoAsset,videoAsset, nil];

// Look up the preset names supported for this media

NSArray *presets = [AVAssetExportSession exportPresetsCompatibleWithAsset:videoAsset];

NSLog(@"%@",presets);

if ([presets containsObject:AVAssetExportPreset640x480])

{

// Export the video file into the sandbox directory

[self startExportMP4VideoWithVideoAssets:videoArrays presetName:AVAssetExportPreset640x480 success:^(NSString *outputPath) {

NSLog(@" startExportMP4VideoWithVideoAssets success,path:%@",outputPath);

} failure:^(NSString *errorMessage, NSError *error) {

NSLog(@"startExportMP4VideoWithVideoAssets fail: %@, error: %@", errorMessage, error);

}];

}

else

{

NSLog(@"exportMove fail");

}

return YES;

}

@end
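The `degressFromVideoFileWithAsset:` method in the listing compares `preferredTransform` against four exact matrices. A possible alternative, sketched here with the hypothetical helper `RotationDegreesFromTransform`, derives the angle from the rotation component of the transform, which also tolerates small floating-point deviations.

```objc
#import <CoreGraphics/CoreGraphics.h>
#include <math.h>

// Hypothetical alternative: returns the rotation encoded in the transform
// as whole degrees in the range 0..359.
static int RotationDegreesFromTransform(CGAffineTransform t) {
    // For a pure rotation, a = cos(angle) and b = sin(angle)
    double radians = atan2(t.b, t.a);
    int degrees = (int)lround(radians * 180.0 / M_PI);
    // atan2 yields -180..180; normalize to 0..359
    return (degrees % 360 + 360) % 360;
}
```

For the four transforms checked in the listing this gives the same answers: 0, 90, 180 and 270.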

Known bug: if the two videos have different aspect ratios, the export reports a failure.
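One common way to combine clips of different sizes is to pick a single render size and scale every clip to fit inside it. The sketch below computes such a transform; `AspectFitTransform` is a hypothetical helper, and whether it resolves the failure described above has not been verified against the article's project.

```objc
#import <Foundation/Foundation.h>
#import <CoreGraphics/CoreGraphics.h>

// Hypothetical helper: transform that scales a clip of naturalSize to fit
// inside renderSize, keeping its aspect ratio and centering it.
static CGAffineTransform AspectFitTransform(CGSize naturalSize, CGSize renderSize) {
    if (naturalSize.width <= 0 || naturalSize.height <= 0) {
        return CGAffineTransformIdentity; // nothing sensible to scale
    }
    CGFloat scale = MIN(renderSize.width / naturalSize.width,
                        renderSize.height / naturalSize.height);
    // Offsets that center the scaled clip in the render area
    CGFloat tx = (renderSize.width - naturalSize.width * scale) / 2.0;
    CGFloat ty = (renderSize.height - naturalSize.height * scale) / 2.0;
    // A point p maps to scale * p + (tx, ty)
    return CGAffineTransformMake(scale, 0, 0, scale, tx, ty);
}
```

The result could be passed to `setTransform:atTime:` on a layer instruction, concatenated with any rotation the clip needs.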
