I. Preface

1.AVAsset

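An asset can come from a file or from the user's photo library. Think of it as a multimedia resource. An asset object is identified by a URL, which can be a local file path or a network stream.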

2.AVAssetTrack

An AVAsset is a collection of tracks (AVAssetTrack), such as audio, video, text, closed captions, and subtitles.

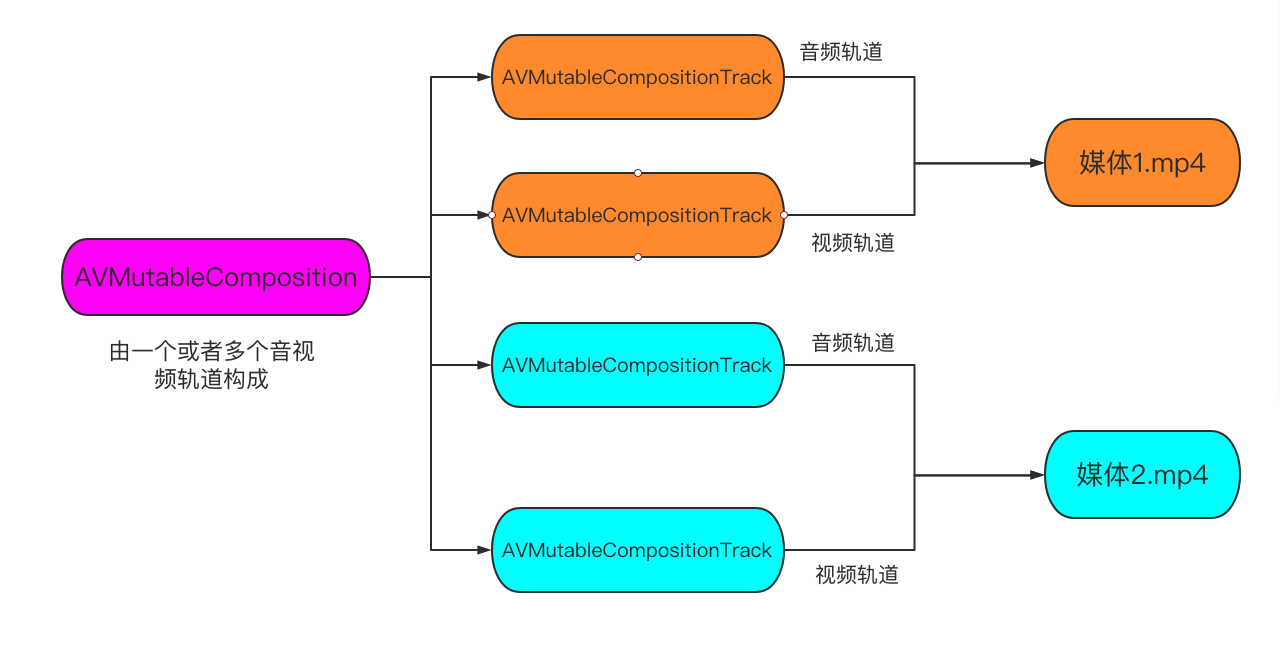

3.AVComposition / AVMutableComposition

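AVMutableComposition lets you add and remove media from one or more AVAssets and combine them into a new video. If you also want the combined audiovisual media to play back in a custom way, use AVMutableAudioMix and AVMutableVideoComposition to coordinate how those resources are mixed and rendered.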
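When several assets are concatenated in one composition, each is inserted at the sum of the durations before it. AVFoundation represents time as CMTime, a rational value/timescale pair, and CMTimeAdd accepts operands with different timescales. The plain C sketch below models that bookkeeping with a hypothetical `RationalTime` struct; it is not the CoreMedia implementation, and simply adds exactly by rescaling to the least common multiple of the two timescales.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-in for CMTime: value / timescale seconds (not the CoreMedia type). */
typedef struct {
    int64_t value;
    int32_t timescale;
} RationalTime;

static int64_t gcd64(int64_t a, int64_t b) {
    while (b != 0) {
        int64_t t = a % b;
        a = b;
        b = t;
    }
    return a < 0 ? -a : a;
}

/* Exact addition: rescale both operands to the LCM of their timescales
   (no overflow handling in this sketch). */
static RationalTime time_add(RationalTime x, RationalTime y) {
    assert(x.timescale > 0 && y.timescale > 0);
    int64_t common = (int64_t)x.timescale / gcd64(x.timescale, y.timescale) * y.timescale;
    RationalTime sum = {
        x.value * (common / x.timescale) + y.value * (common / y.timescale),
        (int32_t)common
    };
    return sum;
}

/* Compare two times as exact fractions (cross-multiplication). */
static int time_equal(RationalTime x, RationalTime y) {
    return x.value * y.timescale == y.value * x.timescale;
}

/* Lay clips end to end: starts[i] receives the insertion point of clip i.
   Returns the total duration of the timeline. */
static RationalTime layout_clips(const RationalTime *durations, int count, RationalTime *starts) {
    RationalTime at = {0, 1}; /* zero, like kCMTimeZero */
    for (int i = 0; i < count; i++) {
        starts[i] = at;
        at = time_add(at, durations[i]);
    }
    return at;
}
```

For example, clips of 2 s (1200/600), 3 s (132300/44100) and 5 s start at 0, 2 and 5 s, for 10 s in total.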

4.AVMutableVideoComposition

For compositing video, the central classes in the AVFoundation API are AVVideoComposition / AVMutableVideoComposition.

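AVVideoComposition / AVMutableVideoComposition give an overall description of how two or more video tracks are composited together. A video composition consists of a set of time ranges, each with instructions describing the compositing behavior during that range, so together they specify how frames are composited at any point in the composition's timeline.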

A video composition manages all the video tracks and determines the size of the final video (its renderSize), so cropping is done here.
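The render size should match how a video is actually displayed, which depends on the source track's preferredTransform: a quarter-turn rotation (typical of portrait phone recordings) swaps width and height, while a half-turn does not. The plain C sketch below models this with hypothetical `Affine`/`SizeD` structs whose fields mirror CGAffineTransform and CGSize, testing the same four (a, b, c, d) rotation patterns as the sample code later in this article.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical mirrors of CGAffineTransform and CGSize (same field names). */
typedef struct { double a, b, c, d, tx, ty; } Affine;
typedef struct { double width, height; } SizeD;

typedef enum { ORIENT_UP, ORIENT_DOWN, ORIENT_LEFT, ORIENT_RIGHT } Orientation;

/* Classify the rotation encoded in a track's preferredTransform. */
static Orientation orientation_of(Affine t) {
    if (t.a == 0 && t.b == 1 && t.c == -1 && t.d == 0) return ORIENT_RIGHT; /* quarter turn */
    if (t.a == 0 && t.b == -1 && t.c == 1 && t.d == 0) return ORIENT_LEFT;  /* quarter turn, other way */
    if (t.a == -1 && t.b == 0 && t.c == 0 && t.d == -1) return ORIENT_DOWN; /* half turn */
    return ORIENT_UP;
}

/* Display size: only quarter turns swap width and height. */
static SizeD display_size(Affine preferred, SizeD natural) {
    Orientation o = orientation_of(preferred);
    bool portrait = (o == ORIENT_LEFT || o == ORIENT_RIGHT);
    SizeD s = portrait ? (SizeD){natural.height, natural.width} : natural;
    return s;
}
```

A 1920 x 1080 track with a quarter-turn transform displays as 1080 x 1920; with a half-turn transform it stays 1920 x 1080.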

5.AVMutableCompositionTrack

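AVMutableCompositionTrack is a track of the new video composed from multiple AVAssets, for example an audio track or a video track. You insert the corresponding media into it (for example, clips for picture-in-picture or watermarks).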

6.AVMutableVideoCompositionLayerInstruction

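AVMutableVideoCompositionLayerInstruction controls how one video track is rendered: its transform (scaling, rotation, translation), opacity, and crop rectangle, each of which can be set at a point in time or ramped over a time range. Effects such as blur are not layer-instruction properties; they need Core Image filters or a custom compositor.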
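A layer instruction's transform usually chains the track's preferredTransform (rotation), a uniform scale, and a translation. The plain C sketch below uses hypothetical structs mirroring CGAffineTransform/CGPoint/CGSize and composes them with the same math as CGAffineTransformConcat(t1, t2) (apply t1, then t2). It assumes an aspect-fit policy (the smaller of the two scale ratios) plus centering; that is one reasonable choice for illustration, while the sample code in section III scales to the render width.

```c
#include <assert.h>

/* Hypothetical mirrors of CGAffineTransform, CGPoint and CGSize (same field names). */
typedef struct { double a, b, c, d, tx, ty; } Affine;
typedef struct { double x, y; } PointD;
typedef struct { double width, height; } SizeD;

/* Same math as CGAffineTransformConcat(t1, t2): apply t1 first, then t2. */
static Affine affine_concat(Affine t1, Affine t2) {
    Affine r = {
        t1.a * t2.a + t1.b * t2.c,
        t1.a * t2.b + t1.b * t2.d,
        t1.c * t2.a + t1.d * t2.c,
        t1.c * t2.b + t1.d * t2.d,
        t1.tx * t2.a + t1.ty * t2.c + t2.tx,
        t1.tx * t2.b + t1.ty * t2.d + t2.ty
    };
    return r;
}

/* Same math as CGPointApplyAffineTransform. */
static PointD affine_apply(Affine t, PointD p) {
    PointD r = { t.a * p.x + t.c * p.y + t.tx, t.b * p.x + t.d * p.y + t.ty };
    return r;
}

/* preferredTransform, then uniform aspect-fit scale, then centering inside render.
   display is the size after rotation (width/height swapped for quarter turns). */
static Affine aspect_fit_transform(Affine preferred, SizeD display, SizeD render) {
    assert(display.width > 0 && display.height > 0);
    double sx = render.width / display.width;
    double sy = render.height / display.height;
    double s = sx < sy ? sx : sy;
    Affine scale = {s, 0, 0, s, 0, 0};
    Affine center = {1, 0, 0, 1,
                     (render.width - display.width * s) / 2.0,
                     (render.height - display.height * s) / 2.0};
    return affine_concat(affine_concat(preferred, scale), center);
}
```

For example, a 1920 x 1080 landscape clip in a 1080 x 1920 render is scaled by 0.5625 to 1080 x 607.5 and shifted down by 656.25, so it sits in the vertical middle of the frame.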

7.AVMutableVideoCompositionInstruction

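An instruction determines the state of every track within one timeRange. Each instruction contains multiple AVMutableVideoCompositionLayerInstruction objects, and an AVVideoComposition is made up of one or more AVVideoCompositionInstruction objects.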

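The most important piece of data an AVVideoCompositionInstruction provides is a time range within the composition's timeline: the range during which a particular form of compositing applies. The compositing to perform is defined by its collection of AVMutableVideoCompositionLayerInstruction objects.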
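Roughly speaking, AVFoundation expects the instructions of a video composition to tile the timeline: the first starts at or before the earliest time to be processed, each later one starts exactly where the previous one ends, and the last one reaches the end. The plain C sketch below checks those conditions over hypothetical integer-tick ranges that share one timescale (for example 600 ticks per second). The sample code in section III satisfies them trivially with a single instruction spanning [0, maxDuration] and switches videos through layer opacity instead.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical time range in integer ticks of one shared timescale. */
typedef struct {
    int64_t start;
    int64_t duration;
} TickRange;

/* True if the ranges start at or before 0, are non-empty, follow each other
   without gaps or overlaps, and the last one reaches total. */
static bool instructions_cover(const TickRange *ranges, int count, int64_t total) {
    assert(count >= 0);
    if (count == 0) {
        return total <= 0;
    }
    if (ranges[0].start > 0) {
        return false;
    }
    for (int i = 0; i < count; i++) {
        if (ranges[i].duration <= 0) {
            return false; /* empty or negative range */
        }
        if (i > 0 && ranges[i].start != ranges[i - 1].start + ranges[i - 1].duration) {
            return false; /* gap or overlap */
        }
    }
    return ranges[count - 1].start + ranges[count - 1].duration >= total;
}
```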

8.AVAssetExportSession

AVAssetExportSession is mainly used to export an asset (such as a composition) to a video file.

9.AVAssetTrackSegment

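AVAssetTrackSegment is an immutable track segment; its timeMapping describes how a time range of source media maps onto the track's timeline.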

10.AVCompositionTrackSegment

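AVCompositionTrackSegment is a segment of a composition track. It inherits from AVAssetTrackSegment and additionally identifies its source media (sourceURL and sourceTrackID).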
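A segment's timeMapping is a CMTimeMapping with a source and a target CMTimeRange. For a plain insert both ranges have the same duration (1:1); after scaleTimeRange:toDuration: they differ and the mapping becomes a linear scaling. The plain C sketch below models that mapping in seconds with hypothetical structs; for real CMTime values, CoreMedia provides CMTimeMapTimeFromRangeToRange.

```c
#include <assert.h>

/* Hypothetical mirrors of CMTimeRange / CMTimeMapping, in seconds. */
typedef struct {
    double start;
    double duration;
} RangeSec;

typedef struct {
    RangeSec source; /* range in the source media */
    RangeSec target; /* range on the composition track's timeline */
} MappingSec;

/* Map a composition time t (inside target) to the corresponding source media time.
   Linear scaling covers both 1:1 inserts and time-scaled segments. */
static double source_time_for(MappingSec m, double t) {
    assert(m.target.duration > 0);
    double fraction = (t - m.target.start) / m.target.duration;
    return m.source.start + fraction * m.source.duration;
}
```

For example, source seconds 2 to 6 inserted at composition time 8 map composition time 9 to source time 3; the same 4 s of source stretched to 8 s maps composition time 6 to source time 3.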

II. Overview of Merging Multiple Videos

AVComposition is a subclass of AVAsset. It combines media data from multiple source files so they can be presented together or processed as a single asset:

```objc
AVMutableComposition *mutableComposition = [AVMutableComposition composition];

// Add resources, for example:
// 1. Add the video track of media 1
// 2. Add the audio track of media 1
// 3. Add the video track of media 2
// 4. Add the audio track of media 2
// ...

// Create an immutable copy of the mutable composition for use
AVComposition *composition = [mutableComposition copy];
AVPlayerItem *playerItem = [[AVPlayerItem alloc] initWithAsset:composition];
```

III. Merging Multiple Videos

To avoid export failures, the approach is to extract the audio and video tracks from each source AVURLAsset, rebuild an AVComposition from them, and then export it with AVAssetExportSession.

![Figure 2: merging three videos (AVFoundation – Merging Multiple Media (4) – Compositing Media of Different Resolutions with a Custom Resolution, 猿说编程)](https://www.codersrc.com/wp-content/uploads/2021/09/cf97212ca3207e9-1024x273.png)

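As shown in the figure above, we merge three videos and export the result at 1080 x 1920. In the code below, the output resolution is derived from the display size of the first video. The sample code is as follows: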

```objc
/******************************************************************************************/
// @Author: 猿说编程
// @Blog: www.codersrc.com
// @File: AVFoundation – Merging Multiple Media (4) – Compositing Media of Different Resolutions with a Custom Resolution
// @Time: 2021/09/06 07:30
// @Motto: A journey of a thousand miles is made of single steps, and rivers and seas of small streams; a rewarding programming life comes from persistent accumulation!
/******************************************************************************************/

#import "ViewController.h"
#import <AVFoundation/AVFoundation.h>
#import <AVKit/AVKit.h>

@implementation ViewController

- (void)addVideos:(NSArray<NSURL *> *)videos outPath:(NSString *)outPath {
    AVMutableComposition *composition = [AVMutableComposition composition];
    // Manages all video tracks of the output (render size, frame rate, instructions)
    AVMutableVideoComposition *videoComposition = [AVMutableVideoComposition videoComposition];

    // Per-video bookkeeping
    NSMutableArray<AVAsset *> *assets = [NSMutableArray array];
    NSMutableArray<AVMutableCompositionTrack *> *videoCompositionTracks = [NSMutableArray array];
    NSMutableArray<AVAssetTrack *> *tracks = [NSMutableArray array];

    // Insertion point of the next video on the composition timeline
    __block CMTime atTime = kCMTimeZero;
    __block CMTime maxDuration = kCMTimeZero;

    // Load the audio/video tracks of each source
    [videos enumerateObjectsUsingBlock:^(NSURL *obj, NSUInteger idx, BOOL *_Nonnull stop) {
        AVAsset *asset = [AVAsset assetWithURL:obj];
        // firstObject returns nil instead of crashing when a track type is missing
        AVAssetTrack *videoTrack = [asset tracksWithMediaType:AVMediaTypeVideo].firstObject;
        AVAssetTrack *audioTrack = [asset tracksWithMediaType:AVMediaTypeAudio].firstObject;
        if (videoTrack == nil) {
            return; // skip sources without video
        }
        CMTimeRange sourceRange = CMTimeRangeMake(kCMTimeZero, asset.duration);

        // Each video gets its own composition track, so it can have its own transform
        AVMutableCompositionTrack *videoCompositionTrack = [composition addMutableTrackWithMediaType:AVMediaTypeVideo preferredTrackID:kCMPersistentTrackID_Invalid];
        NSError *error = nil;
        if (![videoCompositionTrack insertTimeRange:sourceRange ofTrack:videoTrack atTime:atTime error:&error]) {
            NSLog(@"Failed to insert video track: %@", error);
        }

        // Some videos have no audio track
        if (audioTrack != nil) {
            AVMutableCompositionTrack *audioCompositionTrack = [composition addMutableTrackWithMediaType:AVMediaTypeAudio preferredTrackID:kCMPersistentTrackID_Invalid];
            if (![audioCompositionTrack insertTimeRange:sourceRange ofTrack:audioTrack atTime:atTime error:&error]) {
                NSLog(@"Failed to insert audio track: %@", error);
            }
        }

        [tracks addObject:videoTrack];
        [assets addObject:asset];
        [videoCompositionTracks addObject:videoCompositionTrack];

        atTime = CMTimeAdd(atTime, asset.duration);
        maxDuration = CMTimeAdd(maxDuration, asset.duration);
    }];

    if (tracks.count == 0) {
        NSLog(@"No video tracks to merge");
        return;
    }

    // Output resolution: the display size of the first video.
    // Assign a custom CGSize here to force a different output resolution.
    CGSize renderSize = [self getNaturalSize:tracks[0]];
    videoComposition.renderSize = renderSize;

    // One instruction spanning the whole timeline, with one layer instruction per video track
    videoComposition.instructions = @[[self getCompositionInstructions:videoCompositionTracks tracks:tracks assets:assets maxDuration:maxDuration naturalSize:renderSize]];

    // Frame duration (1 / frame rate) from the first source track; fall back to 30 fps
    CMTime frameDuration = tracks[0].minFrameDuration;
    videoComposition.frameDuration = CMTIME_IS_VALID(frameDuration) ? frameDuration : CMTimeMake(1, 30);

    // Render scale: 1.0 renders at full renderSize (this does not control playback speed)
    videoComposition.renderScale = 1.0;

    // Create the exporter once the composition is built; the preset also affects resolution
    AVAssetExportSession *exporter = [[AVAssetExportSession alloc] initWithAsset:composition presetName:AVAssetExportPresetHighestQuality];
    // Export fails if a file already exists at the output path
    [[NSFileManager defaultManager] removeItemAtPath:outPath error:nil];
    exporter.outputURL = [NSURL fileURLWithPath:outPath];
    exporter.outputFileType = AVFileTypeQuickTimeMovie;
    exporter.shouldOptimizeForNetworkUse = YES; // suitable for network transfer
    exporter.videoComposition = videoComposition;

    [exporter exportAsynchronouslyWithCompletionHandler:^{
        dispatch_async(dispatch_get_main_queue(), ^{
            if (exporter.status == AVAssetExportSessionStatusCompleted) {
                NSLog(@"Succeeded, path: %@", outPath);
                [self playVideoWithUrl:exporter.outputURL]; // play the video after a successful export
            } else {
                NSLog(@"Failed -- %@", exporter.error);
            }
        });
    }];
}

- (AVMutableVideoCompositionInstruction *)getCompositionInstructions:(NSArray<AVMutableCompositionTrack *> *)compositionTracks
                                                              tracks:(NSArray<AVAssetTrack *> *)tracks
                                                              assets:(NSArray<AVAsset *> *)assets
                                                         maxDuration:(CMTime)maxDuration
                                                         naturalSize:(CGSize)naturalSize {
    // End time of the current video on the timeline
    __block CMTime atTime = kCMTimeZero;
    NSMutableArray *layerInstructions = [NSMutableArray array];

    // A single instruction covering the whole timeline
    AVMutableVideoCompositionInstruction *compositionInstruction = [AVMutableVideoCompositionInstruction videoCompositionInstruction];
    compositionInstruction.timeRange = CMTimeRangeMake(kCMTimeZero, maxDuration);

    [compositionTracks enumerateObjectsUsingBlock:^(AVMutableCompositionTrack *_Nonnull compositionTrack, NSUInteger idx, BOOL *_Nonnull stop) {
        AVAssetTrack *assetTrack = tracks[idx];
        AVAsset *asset = assets[idx];
        // Layer instruction for one video: transform (scale, rotate), opacity, etc.
        AVMutableVideoCompositionLayerInstruction *layerInstruction = [AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack:compositionTrack];

        // Rotate and scale the video into the render size
        CGAffineTransform transform = [self getTransformFromTrack:assetTrack naturalSize:naturalSize];
        [layerInstruction setTransform:transform atTime:kCMTimeZero];

        // Hide this layer when its video ends so the next video shows through
        atTime = CMTimeAdd(atTime, asset.duration);
        [layerInstruction setOpacity:0.0 atTime:atTime];
        [layerInstructions addObject:layerInstruction];
    }];

    compositionInstruction.layerInstructions = layerInstructions;
    return compositionInstruction;
}

// Rotate with preferredTransform, scale to the render width, then center vertically
- (CGAffineTransform)getTransformFromTrack:(AVAssetTrack *)track naturalSize:(CGSize)naturalSize {
    CGSize displaySize = [self getNaturalSize:track];
    CGFloat scale = naturalSize.width / displaySize.width;
    CGAffineTransform scaled = CGAffineTransformConcat(track.preferredTransform, CGAffineTransformMakeScale(scale, scale));
    CGFloat offsetY = (naturalSize.height - displaySize.height * scale) / 2.0;
    return CGAffineTransformConcat(scaled, CGAffineTransformMakeTranslation(0, offsetY));
}

// Display size of a track: naturalSize, with width and height swapped for portrait videos
- (CGSize)getNaturalSize:(AVAssetTrack *)track {
    CGAffineTransform t = track.preferredTransform;
    // Portrait = quarter-turn rotation (UIImageOrientationRight or UIImageOrientationLeft).
    // A half-turn (UIImageOrientationDown) keeps the original width and height.
    BOOL isPortrait = (t.a == 0 && t.d == 0 &&
                       ((t.b == 1.0 && t.c == -1.0) || (t.b == -1.0 && t.c == 1.0)));
    if (isPortrait) {
        return CGSizeMake(track.naturalSize.height, track.naturalSize.width);
    }
    return track.naturalSize;
}

#pragma mark - Documents directory

- (NSString *)dirDoc {
    return [NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES) firstObject];
}

#pragma mark - Play the exported video

- (void)playVideoWithUrl:(NSURL *)url {
    AVPlayerViewController *playerViewController = [[AVPlayerViewController alloc] init];
    playerViewController.player = [[AVPlayer alloc] initWithURL:url];
    playerViewController.view.frame = self.view.frame;
    playerViewController.view.layer.backgroundColor = [UIColor redColor].CGColor;
    [playerViewController.player play];
    [self presentViewController:playerViewController animated:YES completion:nil];
}

@end
```

The merged result is as follows:

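IV. You May Also Like

- AVAsset: loading media

- AVAssetTrack: getting video and audio information

- AVMetadataItem: getting media metadata

- AVAssetImageGenerator: capturing a frame

- AVAssetImageGenerator: getting multiple frames

- AVAssetExportSession: trimming/transcoding

- AVPlayer: playing video

- AVPlayerItem: managing asset objects

- AVPlayerLayer: displaying video

- AVQueuePlayer: playing multiple media files

- AVComposition / AVMutableComposition: merging multiple media

- AVVideoComposition / AVMutableVideoComposition: managing all video tracks

- AVCompositionTrack / AVMutableCompositionTrack: adding, removing, and scaling audio/video track media

- AVAssetTrackSegment: immutable track segments

- AVCompositionTrackSegment: composition track segments

- AVVideoCompositionInstruction / AVMutableVideoCompositionInstruction: composition instructions

- AVMutableVideoCompositionLayerInstruction: video track layer instructions

- AVFoundation – Merging Multiple Media (2) – Seamlessly joining multiple videos on one track

- AVFoundation – Merging Multiple Media (3) – Multiple tracks, each holding a single audio or video

- AVFoundation – Merging Multiple Media (4) – Compositing media of different resolutions with a custom resolution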
